Amazon's Dynamo - Foundations of Cloud NoSQL

Taking a look at the Dynamo white paper that laid the foundations of DynamoDb

Before we get started, a quick clarification: this blog focuses on Dynamo, the distributed key-value store Amazon used internally, which laid the groundwork for DynamoDB. While both share a similar name and concept, they are distinct systems. I'll be covering DynamoDB in a follow-up post, so stay tuned for that.

With that out of the way, let’s dive into the core of the Dynamo system.

The Why

I think to truly develop an appreciation of a system, we must look at the conditions that necessitated its creation. When the paper was released in 2007, some of Amazon’s use cases didn’t align with the traditional distributed relational databases. This prompted Amazon to create an “always-on” distributed key-value store. Here’s why:

High Availability Over Strong Consistency: Many of Amazon's services favored systems that prioritized high availability and the ability to write data at all times—even if that meant sacrificing strong consistency. From a CAP theorem perspective: Partition Tolerance (P) is non-negotiable, since Dynamo is a distributed system. The trade-off, then, is between Consistency (C) and Availability (A). Dynamo was designed to favor availability over strict consistency - an essential choice for applications that require data stores to be always available to write.

Simple Data Access: The applications in question didn’t require complex queries. Instead, they needed to read data based on specific keys, making a simple key-value store a good fit.

One example use case cited in the paper is Amazon’s shopping cart service. This service doesn't need complicated queries—what it does need is the ability to reliably add an item to the cart every time a user clicks “Add to Cart,” regardless of any system failures that might be occurring.

The Architecture

At API interaction level, Dynamo exposed a very simple interface of get() and put() operations. This simplicity allowed users of the system to interact with it without unnecessary complexity.

Data Partitioning and Consistent Hashing:

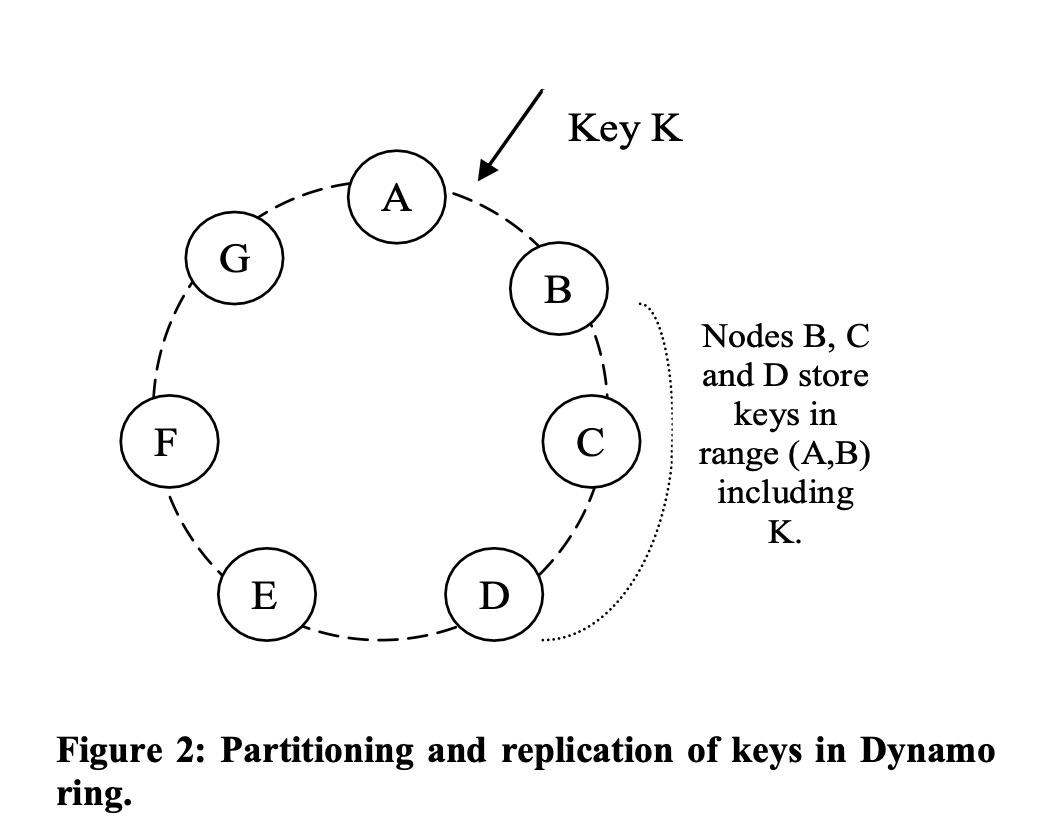

Dynamo distributes data across multiple nodes using consistent hashing. Each item is hashed, and the resulting value determines where it lands on the hash ring. Once a key’s position on the ring is identified, the key is stored on the node closest to that position in the clockwise direction. This node is called the coordinator node.

This approach has a major advantage: when nodes are added or removed, only the immediate neighbors of the affected node are impacted, minimizing disruption. However, consistent hashing can still lead to hotspots (uneven load distribution). To mitigate this, Dynamo introduces virtual nodes, where each physical node is mapped to multiple virtual spots on the hash ring, spreading the load more evenly.

Replication

To achieve high availability, Dynamo replicates each write to N-1 successor nodes in the clockwise direction on the ring (with N being a parameter configured per instance). Because nodes are virtual, the actual physical storage nodes handling replication may be fewer than N-1. In order to guarantee that the write is truly replicated, some virtual nodes in the ring may be skipped. If a coordinator node fails during a write request, the system follows the successors to find another node that can handle the write request. The write information is eventually synchronized back once the coordinator comes back up.

Data access and Write Conflicts

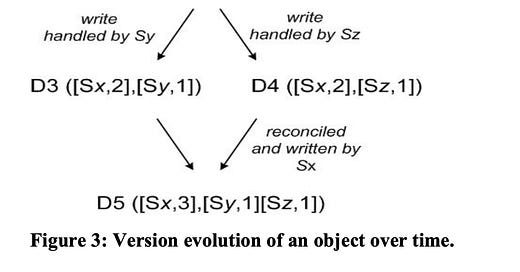

Since Dynamo sacrifices consistency in favor of availability, write conflicts are an inevitable consequence. To address this, Dynamo uses object versioning and vector clocks to track and resolve conflicts. Every write creates a new version of an object, meaning multiple versions of the same object can coexist in the system. When versions diverge—say, because two nodes accepted different writes—vector clocks are used for reconciliation. A vector clock represents a list of node and version counter pairs. The paper explains how the vector clocks help reconcile write conflicts:

If the counters on the first object’s clock are less-than-or-equal to all of the nodes in the second clock, then the first is an ancestor of the second and can be forgotten. Otherwise, the two changes are considered to be in conflict and require reconciliation.

When writing to the datastore, clients include the context they received prior to the write. This context helps generate the necessary vector clocks and define the reconciliation strategy. Reconciliation takes place on the client side to avoid rejecting writes in the event of conflicts, especially when some replicas cannot be reached. Since Dynamo is designed to be an “always-on” datastore, it is crucial to accept writes, even if there are conflicts.

For example, in step 3, you'll see that versions D3 and D4 diverged because nodes Sy and Sz processed independent writes based on the same [Sx, 2] vector clock. These divergent versions are reconciled on the client side, and the final version, D5, is written back with the updated vector clock.

Other notable features

Assumption of No Bad Actors: Dynamo’s design assumes that all nodes behave correctly, as the system operates within Amazon’s trusted network. This simplifies the system’s design and enables the use of a basic quorum-based approach for ensuring consistency.

Merkle Trees: To detect discrepancies between replicas, Dynamo uses Merkle trees. These trees enable an anti-entropy process to bring out-of-sync replicas back into harmony.

Pruning Vector Clocks: Over time, vector clocks can grow large. Dynamo includes a background process to prune these clocks and prevent them from becoming a performance bottleneck.

Quorum Reads and Writes: Dynamo uses a quorum-based model where R (read) and W (write) represent the number of nodes that must participate in a read or write operation. The system typically requires that R + W > N. This ensures that any read or write operation involves a sufficient number of nodes to guarantee availability and minimize data inconsistency. For example, setting R to 1 allows for very fast read performance because only one node needs to respond.

Client-Side Routing: Dynamo clients are responsible for routing requests directly to the appropriate nodes. This minimizes latency by avoiding additional network hops that would occur if requests passed through a request router or load balancer. Periodically, clients fetch an updated view of Dynamo’s membership state, which helps them determine the correct set of nodes for a given key.

Takeaways

Amazon measures the success of Dynamo by evaluating its 99.9th percentile latency which reflects the extreme focus on performance from the perspective of a user’s experience. Dynamo is an extremely efficient system, built by combining established distributed systems principles. Its efficiency is evident in every part of its design.

However, we must keep in mind, Dynamo requires teams to carefully consider their access patterns and configuration choices. Teams need to understand their reconciliation strategies, decide on the number of replicas, and be ready to accept the trade-off between availability and consistency. This trade-off is a hard decision to make, especially when strong consistency is typically preferred in most systems.

Dynamo was designed for use cases where an always-on, low-latency datastore is critical. Amazon’s shopping cart service is a prime example where Dynamo’s trade-offs make sense. While the system was built for a specific use case, it’s a beautifully engineered solution that laid the foundation for NoSQL databases in the cloud.

Dynamo went on to influence DynamoDB, Amazon’s fully-managed cloud database service, and also inspired other systems like Cassandra.

If you’re interested in learning more, I highly recommend reading the original paper to get a deeper understanding of Dynamo's design and the challenges it addresses.

Stay tuned for Part 2, where I’ll dive into the architecture of DynamoDB, the system that evolved from Dynamo.

Sources:

https://www.allthingsdistributed.com/files/amazon-dynamo-sosp2007.pdf